Keras

: Builds models composed of layers.

: Parameters can be managed individually for each layer.

- Keras Sequential API

keras.io/ko/models/sequential/

Sequential - Keras Documentation


- Keras basic concepts and modeling steps

cafe.daum.net/flowlife/S2Ul/10


- activation function

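Linear regression : linear activation (loss: mse)

Binary classification : step function / sigmoid function at the output (ReLU is normally used in the hidden layers)

Multiclass classification : softmax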
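The activation functions listed above can be sketched in plain Python. This is for intuition only (it is not how Keras implements them); the input values are arbitrary.

```python
import math

def linear(z):
    # identity: used at the output for regression
    return z

def sigmoid(z):
    # squashes z into (0, 1); read as a probability in binary classification
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    # max(0, z): the usual choice for hidden layers
    return max(0.0, z)

def softmax(zs):
    # turns a list of scores into probabilities that sum to 1 (multiclass)
    m = max(zs)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0))            # 0.5
print(relu(-2.0), relu(3.0))   # 0.0 3.0
print(softmax([1.0, 2.0, 3.0]))
```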

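layer : a group of nodes processed in parallel

Dense : defines a fully connected layer

Sequential : a network built by stacking layers in order. Typically ReLU in the hidden layers + sigmoid or softmax at the output

When the predicted values differ from the actual values, the error is fed back (backpropagation) to adjust the weights and improve the model.

If the predictions match the training labels perfectly, the model may be overfitting: it fits the training data but generalizes poorly to new data.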

- Backpropagation

m.blog.naver.com/samsjang/221033626685

[Part 35] Core concepts of deep learning - understanding backpropagation (1)
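Backpropagation is the chain rule applied layer by layer. The following plain-Python sketch uses one sigmoid neuron with binary cross-entropy loss. The input, label, weights, and learning rate are made-up illustration values. It checks the analytic gradient (dL/dz = p - y) against a numerical one and shows that one update step lowers the loss.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce(p, y):
    # binary cross-entropy for a single sample
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def forward(w, b, x, y):
    # forward pass: z = w*x + b -> p = sigmoid(z) -> loss
    return bce(sigmoid(w * x + b), y)

def backward(w, b, x, y):
    # chain rule: dL/dz = p - y for sigmoid + BCE; dz/dw = x, dz/db = 1
    p = sigmoid(w * x + b)
    dz = p - y
    return dz * x, dz

w, b, x, y = 0.5, -0.2, 2.0, 1.0
gw, gb = backward(w, b, x, y)

# numerical check with central differences
eps = 1e-6
nw = (forward(w + eps, b, x, y) - forward(w - eps, b, x, y)) / (2 * eps)
nb = (forward(w, b + eps, x, y) - forward(w, b - eps, x, y)) / (2 * eps)
print('analytic:', gw, gb, 'numeric:', nw, nb)

# one gradient-descent step (learning rate 0.1) moves the weights against the gradient
lr = 0.1
before = forward(w, b, x, y)
after = forward(w - lr * gw, b - lr * gb, x, y)
print('loss before:', before, 'after:', after)
```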

Logic-gate model (classification) with Keras

* ke1.py

import tensorflow as tf

import numpy as np

print(tf.keras.__version__)

# 1. Collect and prepare the data

x = np.array([[0,0],[0,1],[1,0],[1,1]])

#y = np.array([0,1,1,1]) # or

#y = np.array([0,0,0,1]) # and

y = np.array([0,1,1,0]) # xor : cannot be solved with a single node (not linearly separable)

# 2. Build the model (define the network)

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation

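# Option A: pass the layers as a list

model = Sequential([

    Dense(input_dim=2, units=1),

    Activation('sigmoid')

])

# Option B (equivalent; it replaces the model above): add the layers one at a time

model = Sequential()

model.add(Dense(units=1, input_dim=2))

model.add(Activation('sigmoid'))

# input_dim : number of neurons in the input layer (number of features)

# units : number of output neurons

from tensorflow.keras.models import Sequential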

from tensorflow.keras.layers import Dense, Activation

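model = Sequential() : create the network

model.add(layer) : add a layer to the model

Dense(units=, input_dim=) : define a fully connected layer

input_dim : number of neurons in the input layer

units : number of output neurons

kernel_initializer (init in Keras 1) : weight initialization method, e.g. 'random_uniform' (uniform distribution) / 'random_normal' (Gaussian distribution)

Activation('name') : set the activation function. linear (linear regression) / sigmoid (binary classification) / softmax (multiclass classification) / relu (hidden layers)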

# 3. Configure the training process

# choose one optimizer, given by name (default settings):

model.compile(optimizer='sgd', loss='binary_crossentropy', metrics=['accuracy'])

model.compile(optimizer='rmsprop', loss='binary_crossentropy', metrics=['accuracy'])

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# or pass an optimizer object with explicit settings:

from tensorflow.keras.optimizers import SGD, RMSprop, Adam

model.compile(optimizer=SGD(learning_rate=0.01), loss='binary_crossentropy', metrics=['accuracy'])

model.compile(optimizer=SGD(learning_rate=0.01, momentum=0.9), loss='binary_crossentropy', metrics=['accuracy'])

model.compile(optimizer=RMSprop(learning_rate=0.01), loss='binary_crossentropy', metrics=['accuracy'])

model.compile(optimizer=Adam(learning_rate=0.01), loss='binary_crossentropy', metrics=['accuracy'])

from tensorflow.keras.optimizers import SGD, RMSprop, Adam

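compile(optimizer=, loss='binary_crossentropy', metrics=['accuracy']) : configure training

SGD : Stochastic Gradient Descent

RMSprop : fixes a drawback of Adagrad, whose learning rate shrinks too much as training goes on, by using a moving average of squared gradients

Adam : combines the advantages of Momentum and RMSprop

learning_rate (lr in older versions) : the learning rate (step size)

momentum : momentum (inertia) term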
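The update rules behind these optimizers can be sketched in plain Python on a toy loss f(w) = (w - 3)^2, starting from w = 0. This is a textbook-style sketch, not Keras's implementation. The choices lr=0.1 and 500 steps are for illustration only; Keras's default learning rates differ. With a fixed learning rate, RMSprop and Adam may settle close to w = 3 rather than exactly on it.

```python
import math

def grad(w):
    # derivative of the toy loss f(w) = (w - 3)^2
    return 2.0 * (w - 3.0)

def sgd(w=0.0, lr=0.1, steps=500):
    for _ in range(steps):
        w -= lr * grad(w)                      # plain gradient step
    return w

def sgd_momentum(w=0.0, lr=0.1, beta=0.9, steps=500):
    v = 0.0
    for _ in range(steps):
        v = beta * v - lr * grad(w)            # velocity keeps part of the previous step (inertia)
        w += v
    return w

def rmsprop(w=0.0, lr=0.1, rho=0.9, eps=1e-7, steps=500):
    s = 0.0
    for _ in range(steps):
        g = grad(w)
        s = rho * s + (1 - rho) * g * g        # moving average of squared gradients
        w -= lr * g / (math.sqrt(s) + eps)     # step scaled by the recent gradient size
    return w

def adam(w=0.0, lr=0.1, b1=0.9, b2=0.999, eps=1e-7, steps=500):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = b1 * m + (1 - b1) * g              # momentum part (first moment)
        v = b2 * v + (1 - b2) * g * g          # RMSprop part (second moment)
        m_hat = m / (1 - b1 ** t)              # bias correction for the zero start
        v_hat = v / (1 - b2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

for name, opt in [('SGD', sgd), ('SGD+momentum', sgd_momentum), ('RMSprop', rmsprop), ('Adam', adam)]:
    print(name, round(opt(), 4))
```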

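# 4. Train the model

model.fit(x, y, epochs=1000, batch_size=1, verbose=1)

model.fit(x, y, epochs=, batch_size=, verbose=) : train the model

epochs : number of passes over the whole training data

batch_size : number of samples per weight update (weight updates per epoch = number of samples / batch_size, rounded up); affects training speed.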
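The batch_size arithmetic can be made concrete with a tiny helper. The name `updates_per_epoch` is hypothetical. The count is rounded up because the last batch may be smaller than the others.

```python
import math

def updates_per_epoch(n_samples, batch_size):
    # one weight update per batch; a partial last batch still counts
    return math.ceil(n_samples / batch_size)

print(updates_per_epoch(4, 1))           # 4 updates per epoch for the 4 XOR rows
print(updates_per_epoch(4, 1) * 1000)    # 4000 updates over epochs=1000
print(updates_per_epoch(140, 32))        # 5 (4 full batches + 1 batch of 12)
```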

# 5. Evaluate the model

loss_metrics = model.evaluate(x, y)

print('loss_metrics :', loss_metrics)

# loss_metrics : [0.4869873821735382, 0.75]

evaluate(feature, label) : evaluate model performance (returns [loss, metrics...])

# 6. Predict

pred = model.predict(x)

print('pred :\n', pred)

'''

[[0.36190987]

[0.85991323]

[0.8816227 ]

[0.98774564]]

'''

pred = (model.predict(x) > 0.5).astype('int32')

print('pred :\n', pred.flatten())

# [0 1 1 1]

# 7. Save the model

# save the model once you are satisfied with it

model.save('test.hdf5')

del model # delete the model object from memory

from tensorflow.keras.models import load_model

model2 = load_model('test.hdf5')

pred2 = (model2.predict(x) > 0.5).astype('int32')

print('pred2 :\n', pred2.flatten())

model.save('filename.hdf5') : save the model

del model : delete the model object

from tensorflow.keras.models import load_model

model = load_model('filename.hdf5') : load the model

Adding nodes (hidden layers) to solve the XOR logic gate

* ke2.py

import tensorflow as tf

import numpy as np

# 1. Collect and prepare the data

x = np.array([[0,0],[0,1],[1,0],[1,1]])

y = np.array([0,1,1,0]) # xor : cannot be solved with a single node

# 2. Build the model (define the network)

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation

model = Sequential()

model.add(Dense(units=5, input_dim=2))

model.add(Activation('relu'))

model.add(Dense(units=5))

model.add(Activation('relu'))

model.add(Dense(units=1))

model.add(Activation('sigmoid'))

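# Equivalent, more compact form (use instead of the six add() calls above, not in addition to them):

# model = Sequential()

# model.add(Dense(units=5, input_dim=2, activation='relu'))

# model.add(Dense(5, activation='relu'))

# model.add(Dense(1, activation='sigmoid'))

# Check the model parameters

model.summary()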

'''

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 5) 15

_________________________________________________________________

activation (Activation) (None, 5) 0

_________________________________________________________________

dense_1 (Dense) (None, 5) 30

_________________________________________________________________

activation_1 (Activation) (None, 5) 0

_________________________________________________________________

dense_2 (Dense) (None, 1) 6

_________________________________________________________________

activation_2 (Activation) (None, 1) 0

=================================================================

Total params: 51

Trainable params: 51

Non-trainable params: 0

'''

=> Param : (2+1) * 5 = 15 -> (5+1) * 5 = 30 -> (5+1) * 1 = 6

: (input_dim + 1) * units, i.e. one weight per input plus one bias per unit (Activation layers have no parameters)

=> Total params : 15 + 30 + 6 = 51
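The parameter count can be checked with a few lines of plain Python. `dense_params` is a hypothetical helper, and the layer sizes mirror ke2.py (2 inputs, then Dense(5), Dense(5), Dense(1)).

```python
def dense_params(n_in, units):
    # weights: n_in per unit, plus one bias per unit
    return (n_in + 1) * units

layer_sizes = [2, 5, 5, 1]   # input_dim=2 -> 5 -> 5 -> 1
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(per_layer, sum(per_layer))   # [15, 30, 6] 51
```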

# 3. Configure the training process

from tensorflow.keras.optimizers import Adam

model.compile(optimizer=Adam(0.01), loss='binary_crossentropy', metrics=['accuracy'])

# 4. Train the model

history = model.fit(x, y, epochs=100, batch_size=1, verbose=1)

# 5. Evaluate the model

loss_metrics = model.evaluate(x, y)

print('loss_metrics :', loss_metrics) # loss_metrics : [0.13949958980083466, 1.0]

pred = (model.predict(x) > 0.5).astype('int32')

print('pred :\n', pred.flatten())

print('------------')

print(model.input)

print(model.output)

print(model.weights) # check the kernel (weights) and bias values

print('------------')

print(history.history['loss']) # per-epoch values recorded during training

print(history.history['accuracy'])

# Plot the loss values recorded during training

import matplotlib.pyplot as plt

plt.plot(history.history['loss'], label='train loss')

plt.xlabel('epochs')

plt.show()

import pandas as pd

pd.DataFrame(history.history).plot(figsize=(8, 5))

plt.show()
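Here is why the hidden layer in ke2.py helps. The plain-Python sketch below hand-wires a 2-2-1 ReLU network that computes XOR exactly. Its weights are chosen by hand, not learned by Keras. A single sigmoid unit cannot do this because XOR is not linearly separable.

```python
def relu(z):
    return max(0.0, z)

def xor_net(x1, x2):
    # hidden layer: two ReLU units with hand-picked weights and biases
    h1 = relu(x1 + x2)        # how many inputs are 1
    h2 = relu(x1 + x2 - 1)    # fires only when both inputs are 1
    # output layer: a linear combination of the hidden units
    return h1 - 2 * h2

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, xor_net(x1, x2))
```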

- Simulation

Tensorflow — Neural Network Playground


playground.tensorflow.org

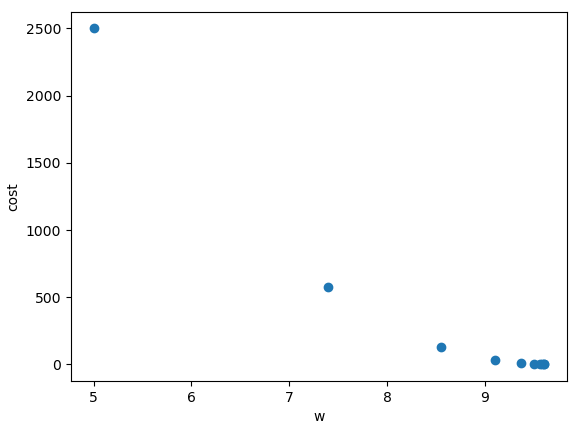

cost function

: find the weight value that minimizes the cost (loss)
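Written out for the linear model used in ke3.py and ke4.py, the cost (mean squared error over m samples) and one gradient-descent step with learning rate α are shown below. With ke3.py's data (x = y = (1, 2, 3), b = 0), the cost reduces to a parabola in w whose minimum is at w = 1.

```latex
\mathrm{cost}(w, b) = \frac{1}{m}\sum_{i=1}^{m}\left(w x_i + b - y_i\right)^2

\frac{\partial\,\mathrm{cost}}{\partial w} = \frac{2}{m}\sum_{i=1}^{m}\left(w x_i + b - y_i\right)x_i,
\qquad
w \leftarrow w - \alpha\,\frac{\partial\,\mathrm{cost}}{\partial w}

\mathrm{cost}(w) = \frac{(w-1)^2\,(1^2 + 2^2 + 3^2)}{3} = \frac{14}{3}\,(w-1)^2
```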

* ke3.py

import tensorflow as tf

import matplotlib.pyplot as plt

x = tf.constant([1., 2., 3.]) # a plain Python list cannot be multiplied by w element-wise

y = tf.constant([1., 2., 3.])

b = 0.0

w = 1.0

hypothesis = x * w + b # predictions

cost = tf.reduce_sum(tf.pow(hypothesis - y, 2)) / len(x) # mean squared error

w_val = []

cost_val = []

for i in range(-30, 50):

    feed_w = i * 0.1 # candidate w from -3.0 to 4.9 in steps of 0.1 (a grid of values, not a learning rate)

    hypothesis = tf.multiply(feed_w, x) + b

    cost = tf.reduce_mean(tf.square(hypothesis - y))

    cost_val.append(cost.numpy())

    w_val.append(feed_w)

    print(str(i) + ', cost:' + str(cost.numpy()) + ', w:', str(feed_w))

plt.plot(w_val, cost_val)

plt.xlabel('w')

plt.ylabel('cost')

plt.show()

Finding the optimal w with GradientTape

: minimize the cost with gradient descent

* ke4.py

# Simple linear regression model

# Find the w for which f(x) = w * x gets close to 50 when x = 5

import tensorflow as tf

import numpy as np

x = tf.Variable(5.0)

w = tf.Variable(0.0)

@tf.function

def train_step():

    with tf.GradientTape() as tape: # records operations for automatic differentiation

        # tape.watch(w) is not needed: trainable Variables are watched automatically

        y = tf.multiply(w, x) + 0

        loss = tf.square(tf.subtract(y, 50)) # (predicted - actual) squared

    grad = tape.gradient(loss, w) # automatic differentiation: d(loss)/dw

    mu = 0.01 # learning rate

    w.assign_sub(mu * grad)

    return loss

for i in range(10):

    loss = train_step()

    print('{:1}, w:{:4.3}, loss:{:4.5}'.format(i, w.numpy(), loss.numpy()))

'''

0, w: 5.0, loss:2500.0

1, w: 7.5, loss:625.0

2, w:8.75, loss:156.25

3, w:9.38, loss:39.062

4, w:9.69, loss:9.7656

5, w:9.84, loss:2.4414

6, w:9.92, loss:0.61035

7, w:9.96, loss:0.15259

8, w:9.98, loss:0.038147

9, w:9.99, loss:0.0095367

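'''

tf.GradientTape() : records the operations executed inside its with block so that gradients can be computed

tape.gradient(loss, w) : automatic differentiation; returns d(loss)/dw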
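The same loop can be replayed in plain Python, with the derivative that tape.gradient computes written out by hand: d(loss)/dw = 2(wx - 50)x. This is an illustration, not TensorFlow code. Here the update simplifies to w <- 0.5w + 5, so w approaches 10 as 10(1 - 0.5^n), which matches the printed log.

```python
x, target, lr = 5.0, 50.0, 0.01
w = 0.0
losses = []
for i in range(10):
    y = w * x
    loss = (y - target) ** 2          # same loss as train_step()
    grad = 2 * (y - target) * x       # d(loss)/dw, what tape.gradient(loss, w) returns
    w -= lr * grad                    # same as w.assign_sub(mu * grad)
    losses.append(loss)
    print('{:1}, w:{:4.3}, loss:{:4.5}'.format(i, w, loss))
```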

# Using an optimizer object

opti = tf.keras.optimizers.SGD()

x = tf.Variable(5.0)

w = tf.Variable(0.0)

@tf.function

def train_step2():

    with tf.GradientTape() as tape: # records operations for automatic differentiation

        y = tf.multiply(w, x) + 0

        loss = tf.square(tf.subtract(y, 50)) # (predicted - actual) squared

    grad = tape.gradient(loss, w) # automatic differentiation

    opti.apply_gradients([(grad, w)])

    return loss

for i in range(10):

    loss = train_step2()

    print('{:1}, w:{:4.3}, loss:{:4.5}'.format(i, w.numpy(), loss.numpy()))

opti = tf.keras.optimizers.SGD() : create an SGD optimizer (default learning_rate=0.01, the same step size as mu in train_step)

opti.apply_gradients([(grad, w)]) : update each variable with its gradient, given as (gradient, variable) pairs

# Finding the optimal slope (w) and y-intercept (b)

opti = tf.keras.optimizers.SGD()

x = tf.Variable(5.0)

w = tf.Variable(0.0)

b = tf.Variable(0.0)

@tf.function

def train_step3():

    with tf.GradientTape() as tape: # records operations for automatic differentiation

        #y = tf.multiply(w, x) + b

        y = tf.add(tf.multiply(w, x), b)

        loss = tf.square(tf.subtract(y, 50)) # (predicted - actual) squared

    grad = tape.gradient(loss, [w, b]) # gradients with respect to w and b

    opti.apply_gradients(zip(grad, [w, b]))

    return loss

w_val = [] # for plotting

cost_val = []

for i in range(10):

    loss = train_step3()

    print('{:1}, w:{:4.3}, loss:{:4.5}, b:{:4.3}'.format(i, w.numpy(), loss.numpy(), b.numpy()))

    w_val.append(w.numpy())

    cost_val.append(loss.numpy())

'''

0, w: 5.0, loss:2500.0, b: 1.0

1, w: 7.4, loss:576.0, b:1.48

2, w:8.55, loss:132.71, b:1.71

3, w: 9.1, loss:30.576, b:1.82

4, w:9.37, loss:7.0448, b:1.87

5, w: 9.5, loss:1.6231, b: 1.9

6, w:9.56, loss:0.37397, b:1.91

7, w:9.59, loss:0.086163, b:1.92

8, w: 9.6, loss:0.019853, b:1.92

9, w:9.61, loss:0.0045738, b:1.92

'''

import matplotlib.pyplot as plt

plt.plot(w_val, cost_val, 'o')

plt.xlabel('w')

plt.ylabel('cost')

plt.show()

# Building a linear regression model

opti = tf.keras.optimizers.SGD()

w = tf.Variable(tf.random.normal((1,)))

b = tf.Variable(tf.random.normal((1,)))

@tf.function

def train_step4(x, y):

    with tf.GradientTape() as tape: # records operations for automatic differentiation

        hypo = tf.add(tf.multiply(w, x), b)

        loss = tf.reduce_mean(tf.square(tf.subtract(hypo, y))) # mean of (predicted - actual) squared

    grad = tape.gradient(loss, [w, b]) # automatic differentiation

    opti.apply_gradients(zip(grad, [w, b]))

    return loss

x = [1., 2., 3., 4., 5.] # feature

y = [1.2, 2.0, 3.0, 3.5, 5.5] # label

w_vals = [] # for plotting

loss_vals = []

for i in range(100):

    loss_val = train_step4(x, y)

    loss_vals.append(loss_val.numpy())

    if i % 10 == 0:

        print(loss_val)

    w_vals.append(w.numpy())

print('loss_vals :', loss_vals)

print('w_vals :', w_vals)

# loss_vals : [2.457926, 1.4767673, 0.904997, 0.57179654, 0.37762302, 0.26446754, 0.19852567, 0.16009742, 0.13770261, 0.12465141, 0.1170452, 0.112612054, 0.11002797, 0.10852148, 0.10764296, 0.10713041, 0.10683115, 0.10665612, 0.10655358, 0.10649315, 0.10645743, 0.10643599, 0.106422946, 0.10641475, 0.10640935, 0.10640564, 0.10640299, 0.10640085, 0.106398985, 0.106397435, 0.10639594, 0.10639451, 0.10639312, 0.10639181, 0.10639049, 0.10638924, 0.1063879, 0.10638668, 0.10638543, 0.10638411, 0.10638293, 0.1063817, 0.10638044, 0.10637925, 0.106378004, 0.10637681, 0.10637561, 0.106374465, 0.10637329, 0.10637212, 0.10637095, 0.10636979, 0.10636864, 0.10636745, 0.10636636, 0.10636526, 0.10636415, 0.10636302, 0.10636191, 0.10636077, 0.10635972, 0.10635866, 0.10635759, 0.10635649, 0.1063555, 0.10635439, 0.10635338, 0.10635233, 0.10635128, 0.106350325, 0.106349275, 0.1063483, 0.106347285, 0.10634627, 0.10634525, 0.10634433, 0.10634329, 0.10634241, 0.10634136, 0.10634048, 0.10633947, 0.106338575, 0.10633757, 0.10633665, 0.10633578, 0.10633484, 0.10633397, 0.106333, 0.10633211, 0.10633123, 0.106330395, 0.10632948, 0.10632862, 0.1063277, 0.10632684, 0.10632604, 0.10632517, 0.10632436, 0.106323466, 0.10632266]

# w_vals : [array([1.3279629], dtype=float32), array([1.2503898], dtype=float32), array([1.1911799], dtype=float32), array([1.145988], dtype=float32), array([1.1114972], dtype=float32), array([1.0851754], dtype=float32), array([1.0650897], dtype=float32), array([1.0497644], dtype=float32), array([1.0380731], dtype=float32), array([1.0291559], dtype=float32), array([1.0223563], dtype=float32), array([1.0171733], dtype=float32), array([1.0132244], dtype=float32), array([1.0102174], dtype=float32), array([1.0079296], dtype=float32), array([1.0061907], dtype=float32), array([1.0048708], dtype=float32), array([1.0038706], dtype=float32), array([1.0031146], dtype=float32), array([1.002545], dtype=float32), array([1.0021176], dtype=float32), array([1.0017987], dtype=float32), array([1.0015627], dtype=float32), array([1.0013899], dtype=float32), array([1.0012653], dtype=float32), array([1.0011774], dtype=float32), array([1.0011177], dtype=float32), array([1.0010793], dtype=float32), array([1.0010573], dtype=float32), array([1.0010476], dtype=float32), array([1.0010475], dtype=float32), array([1.0010545], dtype=float32), array([1.001067], dtype=float32), array([1.0010837], dtype=float32), array([1.0011035], dtype=float32), array([1.0011257], dtype=float32), array([1.0011497], dtype=float32), array([1.001175], dtype=float32), array([1.0012014], dtype=float32), array([1.0012285], dtype=float32), array([1.0012561], dtype=float32), array([1.0012841], dtype=float32), array([1.0013124], dtype=float32), array([1.0013409], dtype=float32), array([1.0013695], dtype=float32), array([1.0013981], dtype=float32), array([1.0014268], dtype=float32), array([1.0014554], dtype=float32), array([1.001484], dtype=float32), array([1.0015126], dtype=float32), array([1.0015413], dtype=float32), array([1.0015697], dtype=float32), array([1.0015981], dtype=float32), array([1.0016265], dtype=float32), array([1.0016547], dtype=float32), array([1.0016829], dtype=float32), array([1.001711], dtype=float32), array([1.001739], dtype=float32), array([1.0017669], dtype=float32), array([1.0017947], dtype=float32), array([1.0018225], dtype=float32), array([1.0018501], dtype=float32), array([1.0018777], dtype=float32), array([1.0019051], dtype=float32), array([1.0019325], dtype=float32), array([1.0019598], dtype=float32), array([1.001987], dtype=float32), array([1.002014], dtype=float32), array([1.0020411], dtype=float32), array([1.002068], dtype=float32), array([1.0020949], dtype=float32), array([1.0021216], dtype=float32), array([1.0021482], dtype=float32), array([1.0021747], dtype=float32), array([1.0022012], dtype=float32), array([1.0022275], dtype=float32), array([1.0022538], dtype=float32), array([1.00228], dtype=float32), array([1.0023061], dtype=float32), array([1.0023321], dtype=float32), array([1.0023581], dtype=float32), array([1.002384], dtype=float32), array([1.0024097], dtype=float32), array([1.0024353], dtype=float32), array([1.002461], dtype=float32), array([1.0024865], dtype=float32), array([1.0025119], dtype=float32), array([1.0025371], dtype=float32), array([1.0025624], dtype=float32), array([1.0025876], dtype=float32), array([1.0026126], dtype=float32), array([1.0026375], dtype=float32), array([1.0026624], dtype=float32), array([1.0026872], dtype=float32), array([1.0027119], dtype=float32), array([1.0027366], dtype=float32), array([1.0027611], dtype=float32), array([1.0027856], dtype=float32), array([1.00281], dtype=float32), array([1.0028343], dtype=float32)]

plt.plot(w_vals, loss_vals, 'o--')

plt.xlabel('w')

plt.ylabel('cost')

plt.show()

# Plot the fitted regression line

y_pred = tf.multiply(x, w) + b # predictions from the trained model

print('y_pred :', y_pred.numpy())

plt.plot(x, y, 'ro')

plt.plot(x, y_pred, 'b--')

plt.show()
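To cross-check the trained w and b, ordinary least squares gives the exact minimizer of the same mean squared error in closed form. This plain-Python sketch is standalone and rebinds x, y, w, b as ordinary numbers.

```python
x = [1., 2., 3., 4., 5.]
y = [1.2, 2.0, 3.0, 3.5, 5.5]
n = len(x)
mean_x, mean_y = sum(x) / n, sum(y) / n

# slope = covariance(x, y) / variance(x); the intercept makes the line pass through the means
w = sum((a - mean_x) * (t - mean_y) for a, t in zip(x, y)) / sum((a - mean_x) ** 2 for a in x)
b = mean_y - w * mean_x
mse = sum((w * a + b - t) ** 2 for a, t in zip(x, y)) / n
print('w:', w, 'b:', b, 'mse:', mse)
```

It gives w = 1.01, b = 0.01 and an MSE of about 0.1062, which is the value the logged loss_vals are approaching. The logged w_vals are still creeping up toward 1.01 after 100 SGD steps.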

TF 1.x vs 2.x : simple linear regression / logistic regression source code

cafe.daum.net/flowlife/S2Ul/17


Using TensorFlow 1.x

Simple linear regression - using the gradient descent optimizer (1.x)

* ke5_tensorflow1.py

import tensorflow.compat.v1 as tf # to run TensorFlow 1.x code on TensorFlow 2.x

tf.disable_v2_behavior() # to run TensorFlow 1.x code on TensorFlow 2.x

import matplotlib.pyplot as plt

x_data = [1.,2.,3.,4.,5.]

y_data = [1.2,2.0,3.0,3.5,5.5]

x = tf.placeholder(tf.float32)

y = tf.placeholder(tf.float32)

w = tf.Variable(tf.random_normal([1]))

b = tf.Variable(tf.random_normal([1]))

hypothesis = x * w + b

cost = tf.reduce_mean(tf.square(hypothesis - y))

print('\nUsing the gradient descent method------------')

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.01)

train = optimizer.minimize(cost)

sess = tf.Session() # Launch the graph in a session.

sess.run(tf.global_variables_initializer())

w_val = []

cost_val = []

for i in range(501):

    _, curr_cost, curr_w, curr_b = sess.run([train, cost, w, b], {x:x_data, y:y_data})

    w_val.append(curr_w)

    cost_val.append(curr_cost)

    if i % 10 == 0:

        print(str(i) + ' cost:' + str(curr_cost) + ' weight:' + str(curr_w) +' b:' + str(curr_b))

plt.plot(w_val, cost_val)

plt.xlabel('w')

plt.ylabel('cost')

plt.show()

print('--Predicting Y values with the regression model------------------')

print(sess.run(hypothesis, feed_dict={x:[5]})) # [5.0563836]

print(sess.run(hypothesis, feed_dict={x:[2.5]})) # [2.5046895]

print(sess.run(hypothesis, feed_dict={x:[1.5, 3.3]})) # [1.4840119 3.3212316]

Linear regression basics - using Keras (2.x)

* ke5_tensorflow2.py

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras import optimizers

x_data = [1.,2.,3.,4.,5.]

y_data = [1.2,2.0,3.0,3.5,5.5]

model=Sequential() # define a model as a linear stack of layers

model.add(Dense(1, input_dim=1, activation='linear'))

# available activation functions : https://www.tensorflow.org/versions/r2.0/api_docs/python/tf/keras/activations

sgd=optimizers.SGD(learning_rate=0.01) # learning rate 0.01

model.compile(optimizer=sgd, loss='mse',metrics=['mse'])

lossmetrics = model.evaluate(x_data,y_data) # loss of the untrained model (before fit)

print(lossmetrics)

# The optimizer is SGD (stochastic gradient descent), a variant of gradient descent.

# The loss function is the mean squared error (mse).

# Minimize the error on the given x and y data over 100 epochs.

model.fit(x_data, y_data, batch_size=1, epochs=100, shuffle=False, verbose=2)

from sklearn.metrics import r2_score

print('R2 (explained variance) : ', r2_score(y_data, model.predict(x_data)))

print('Prediction : ', model.predict([5])) # [[4.801656]]

print('Prediction : ', model.predict([2.5])) # [[2.490468]]

print('Prediction : ', model.predict([1.5, 3.3])) # [[1.565993][3.230048]]

Building a simple linear model

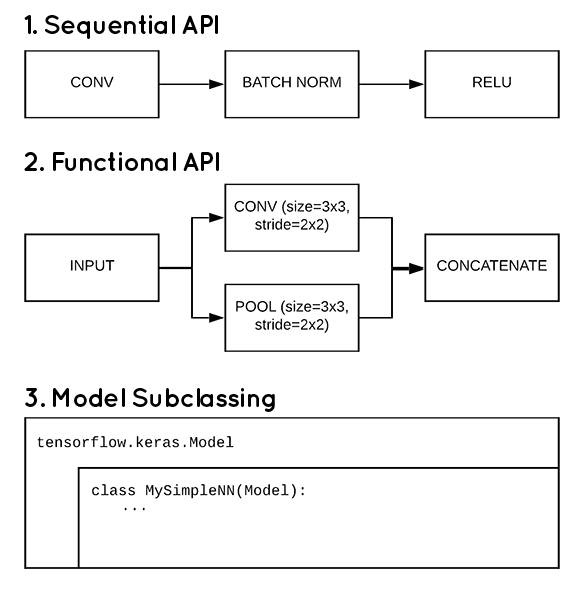
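sklearn's r2_score computes 1 - SS_res / SS_tot, the share of the variance in y that the model explains. Below is a plain-Python sketch of that formula, with a hypothetical helper name `r2`. The predictions come from the least-squares line 1.01x + 0.01 for this same x_data/y_data.

```python
def r2(y_true, y_pred):
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # variation the model misses
    ss_tot = sum((t - mean) ** 2 for t in y_true)              # total variation around the mean
    return 1 - ss_res / ss_tot

y_true = [1.2, 2.0, 3.0, 3.5, 5.5]
y_pred = [1.01 * v + 0.01 for v in [1., 2., 3., 4., 5.]]
print(r2(y_true, y_pred))   # about 0.9505
```

A perfect model scores 1.0. Always predicting the mean of y scores 0.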

Three ways to write a Keras model / finding the best model

cafe.daum.net/flowlife/S2Ul/22


- Predicting exam scores from study hours - three ways to build a model

* ke6_regression.py

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras import optimizers

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import tensorflow as tf

x_data = np.array([1,2,3,4,5], dtype=np.float32) # feature

y_data = np.array([11,32,53,64,70], dtype=np.float32) # label

print(np.corrcoef(x_data, y_data)) # 0.9743547 : a strong correlation, so we assume a causal relationship
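np.corrcoef returns the Pearson correlation matrix. Its off-diagonal value can be reproduced by hand with this plain-Python sketch on the same study-hours data:

```python
import math

x = [1, 2, 3, 4, 5]          # study hours
y = [11, 32, 53, 64, 70]     # scores
n = len(x)
mx, my = sum(x) / n, sum(y) / n
sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))   # co-variation
sxx = sum((a - mx) ** 2 for a in x)
syy = sum((b - my) ** 2 for b in y)
r = sxy / math.sqrt(sxx * syy)
print(r)   # about 0.97435
```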

- Method 1 : Sequential API - a fully connected model built by stacking layers in order

model = Sequential()

model.add(Dense(units=1, input_dim=1, activation='linear'))

model.add(Dense(units=1, activation='linear'))

model.summary()

opti = optimizers.Adam(learning_rate=0.01)

model.compile(optimizer=opti, loss='mse', metrics=['mse'])

model.fit(x=x_data, y=y_data, batch_size=1, epochs=100, verbose=1)

loss_metrics = model.evaluate(x=x_data, y=y_data)

print('loss_metrics: ', loss_metrics)

# loss_metrics: [61.95122146606445, 61.95122146606445]

from sklearn.metrics import r2_score

print('R2 : ', r2_score(y_data, model.predict(x_data))) # R2 : 0.8693012272129582

print('Actual : ', y_data) # Actual : [11. 32. 53. 64. 70.]

print('Predicted : ', model.predict(x_data).flatten()) # Predicted : [26.136082 36.97163 47.807175 58.642727 69.478264]

print('Expected score : ', model.predict([0.5, 3.45, 6.7]).flatten())

# Expected score : [22.367954 50.166172 80.79132 ]

plt.plot(x_data, model.predict(x_data), 'b', x_data, y_data, 'ko')

plt.show()

- Method 2 : Functional API - builds more flexible models than the Sequential API

from tensorflow.keras.layers import Input

from tensorflow.keras.models import Model

inputs = Input(shape=(1, )) # create the input layer

output1 = Dense(2, activation='linear')(inputs)

output2 = Dense(1, activation='linear')(output1)

model2 = Model(inputs, output2)

from tensorflow.keras.layers import Input

from tensorflow.keras.models import Model

inputs = Input(shape=(n_inputs, )) : create the input layer

output = Dense(n_outputs, activation='linear')(inputs) : connect an output layer

model2 = Model(inputs, output) : create the model

opti = optimizers.Adam(learning_rate=0.01)

model2.compile(optimizer=opti, loss='mse', metrics=['mse'])

model2.fit(x=x_data, y=y_data, batch_size=1, epochs=100, verbose=1)

loss_metrics = model2.evaluate(x=x_data, y=y_data)

print('loss_metrics: ', loss_metrics) # loss_metrics: [46.31613540649414, 46.31613540649414]

print('R2 : ', r2_score(y_data, model2.predict(x_data))) # R2 : 0.8923131851204337

- Method 3 : Model subclassing API - for writing dynamic models

class MyModel(Model):

    def __init__(self): # constructor

        super(MyModel, self).__init__()

        self.d1 = Dense(2, activation='linear') # create the layers

        self.d2 = Dense(1, activation='linear')

    def call(self, x): # forward pass, run whenever the model is called (e.g. by fit() and predict())

        x = self.d1(x)

        return self.d2(x)

model3 = MyModel() # calls __init__

opti = optimizers.Adam(learning_rate=0.01)

model3.compile(optimizer=opti, loss='mse', metrics=['mse'])

model3.fit(x=x_data, y=y_data, batch_size=1, epochs=100, verbose=1)

loss_metrics = model3.evaluate(x=x_data, y=y_data)

print('loss_metrics: ', loss_metrics) # loss_metrics: [41.4090576171875, 41.4090576171875]

print('R2 : ', r2_score(y_data, model3.predict(x_data))) # R2 : 0.9126391191522784

Multiple linear regression model + TensorBoard (visualizing the model structure and the training process/results)

Predicting the next exam score from five students' scores on three exams

* ke7.py

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras import optimizers

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import tensorflow as tf

# Collect data

x_data = np.array([[70, 85, 80], [71, 89, 78], [50, 80, 60], [66, 20, 60], [50, 30, 10]])

y_data = np.array([73, 82, 72, 57, 34])

# Using the Sequential API

# Build the model

model = Sequential()

#model.add(Dense(1, input_dim=3, activation='linear'))

# Define the layers

model.add(Dense(6, input_dim=3, activation='linear', name='a'))

model.add(Dense(3, activation='linear', name='b'))

model.add(Dense(1, activation='linear', name='c'))

model.summary()

# Configure training

opti = optimizers.Adam(learning_rate=0.01)

model.compile(optimizer=opti, loss='mse', metrics=['mse'])

history = model.fit(x_data, y_data, batch_size=1, epochs=30, verbose=2)

# Visualize

plt.plot(history.history['loss'])

plt.xlabel('epochs')

plt.ylabel('loss')

plt.show()

# Evaluate the model

loss_metrics = model.evaluate(x=x_data, y=y_data)

from sklearn.metrics import r2_score

print('loss_metrics: ', loss_metrics)

print('R2 : ', r2_score(y_data, model.predict(x_data)))

# R2 : 0.7680899501992267

print('Predicted :', model.predict(x_data).flatten())

# Predicted : [84.357574 83.79331 66.111855 57.75085 21.302818]

# Using the Functional API

from tensorflow.keras.layers import Input

from tensorflow.keras.models import Model

inputs = Input(shape=(3,))

output1 = Dense(6, activation='linear', name='a')(inputs)

output2 = Dense(3, activation='linear', name='b')(output1)

output3 = Dense(1, activation='linear', name='c')(output2)

linear_model = Model(inputs, output3)

linear_model.summary()

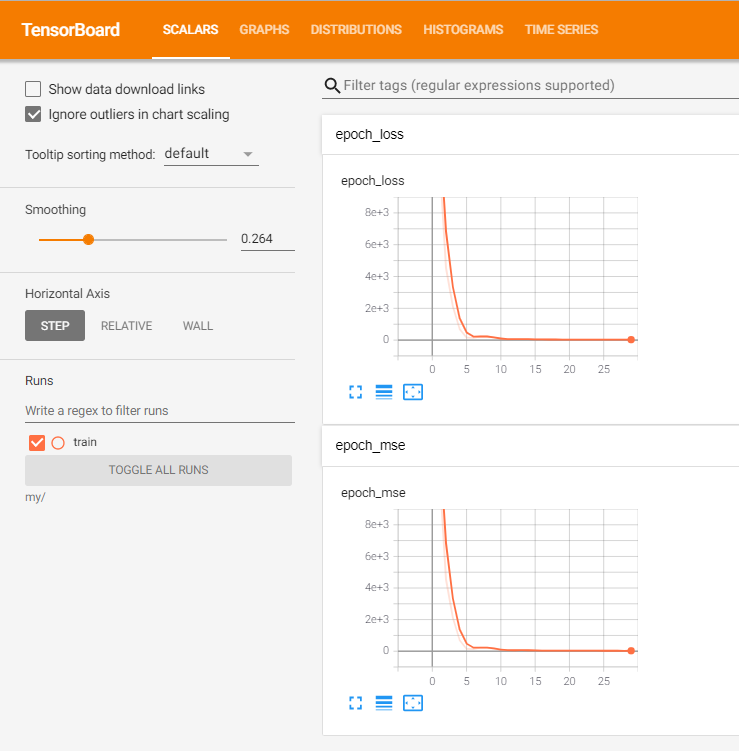

- TensorBoard : inspect how the algorithm behaves to minimize trial and error

from tensorflow.keras.callbacks import TensorBoard

tb = TensorBoard(log_dir='my', histogram_freq=1, write_graph=True, write_images=False) # logs go to the relative folder "my"

# Configure training

opti = optimizers.Adam(learning_rate=0.01)

linear_model.compile(optimizer=opti, loss='mse', metrics=['mse'])

history = linear_model.fit(x_data, y_data, batch_size=1, epochs=30, verbose=2,\

callbacks = [tb])

# Evaluate the model

loss_metrics = linear_model.evaluate(x=x_data, y=y_data)

from sklearn.metrics import r2_score

print('loss_metrics: ', loss_metrics)

# loss_metrics: [26.276317596435547, 26.276317596435547]

print('R2 : ', r2_score(y_data, linear_model.predict(x_data)))

# R2 : 0.9072950307860612

print('Predicted :', linear_model.predict(x_data).flatten())

# Predicted : [80.09034 80.80026 63.217213 55.48591 33.510746]

# Predict new values

x_new = np.array([[50, 55, 50], [91, 99, 98]])

print('Expected score :', linear_model.predict(x_new).flatten())

# Expected score : [53.61225 98.894615]

from tensorflow.keras.callbacks import TensorBoard

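tb = TensorBoard(log_dir='', histogram_freq=, write_graph=, write_images=) : create the TensorBoard callback

log_dir : directory where the logs are written

histogram_freq : how often (in epochs) to compute weight histograms; 0 disables them

write_graph : whether to visualize the model graph in TensorBoard

write_images : whether to write the model weights as images

model.fit(x, y, batch_size=, epochs=, verbose=, callbacks = [tb]) : pass the callback to fit() so that logs are written during training

- To view the TensorBoard results, run TensorBoard from a command prompt:

cd C:\work\psou\pro4\tf_test2

tensorboard --logdir my/

TensorBoard 2.4.1 at http://localhost:6006/ (Press CTRL+C to quit)

=> open http://localhost:6006/ in a browser

- How to use TensorBoard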

TensorBoard usage

pythonkim.tistory.com

Normalization / standardization

: used when the features differ greatly in scale (units)

- Types of scalers

Types of Scaler

zereight.tistory.com

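| Scaler | Description |
|---|---|
| StandardScaler | Standard scaling: uses the mean and standard deviation |
| MinMaxScaler | Scales so that the maximum and minimum become 1 and 0 |
| MaxAbsScaler | Scales so that the maximum absolute value becomes 1 (0 stays 0) |
| RobustScaler | Uses the median and the IQR (interquartile range); minimizes the influence of outliers |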
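The four formulas in the table can be sketched in plain Python. This is not sklearn's code, the function names are hypothetical, and the sample column (with one outlier, 100) is made up. The 25th/75th percentiles use linear interpolation, assumed here to match RobustScaler's default quantile range.

```python
import statistics

def standard_scale(xs):
    mu = statistics.fmean(xs)
    sd = statistics.pstdev(xs)          # population std (ddof=0)
    return [(x - mu) / sd for x in xs]

def minmax_scale_list(xs):
    lo, hi = min(xs), max(xs)
    return [(x - lo) / (hi - lo) for x in xs]

def maxabs_scale(xs):
    m = max(abs(x) for x in xs)
    return [x / m for x in xs]

def robust_scale(xs):
    # center on the median, divide by the IQR (75th - 25th percentile)
    q1, med, q3 = statistics.quantiles(xs, n=4, method='inclusive')
    return [(x - med) / (q3 - q1) for x in xs]

data = [1, 2, 3, 4, 100]   # hypothetical column with one outlier
for name, fn in [('Standard', standard_scale), ('MinMax', minmax_scale_list),
                 ('MaxAbs', maxabs_scale), ('Robust', robust_scale)]:
    print(name, [round(v, 3) for v in fn(data)])
```

With MinMax and MaxAbs, the outlier squeezes the other four values into a narrow band near 0. RobustScaler keeps them spread out (-1 to 0.5) and leaves only the outlier far away.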

* ke8_scaler.py

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras import optimizers

from tensorflow.keras.optimizers import SGD, RMSprop, Adam

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import tensorflow as tf

from sklearn.preprocessing import MinMaxScaler, minmax_scale, StandardScaler, RobustScaler

data = pd.read_csv('https://raw.githubusercontent.com/pykwon/python/master/testdata_utf8/Advertising.csv')

del data['no']

print(data.head(2))

'''

tv radio newspaper sales

0 230.1 37.8 69.2 22.1

1 44.5 39.3 45.1 10.4

'''

# Normalization : rescale each column to the range 0 to 1

xy = minmax_scale(data, axis=0, copy=True)

print(xy[:2])

# [[0.77578627 0.76209677 0.60598065 0.80708661]

# [0.1481231 0.79233871 0.39401935 0.34645669]]

from sklearn.preprocessing import MinMaxScaler, minmax_scale, StandardScaler, RobustScaler

minmax_scale(data, axis=, copy=) : normalization (axis=0 scales each column separately)

# train/test split : keep test data aside to detect overfitting

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(xy[:, :-1], xy[:, -1], \

test_size=0.3, random_state=123)

print(x_train[:2], x_train.shape) # tv, radio, newspaper

print(x_test[:2], x_test.shape)

print(y_train[:2], y_train.shape) # sales

print(y_test[:2], y_test.shape)

'''

[[0.80858979 0.08266129 0.32189974]

[0.30334799 0.00604839 0.20140721]] (140, 3)

[[0.67331755 0.0625 0.30167106]

[0.26885357 0. 0.07827617]] (60, 3)

[0.42125984 0.27952756] (140,)

[0.38582677 0.28346457] (60,)

'''

# Build the model

model = Sequential()

model.add(Dense(1, input_dim=3, activation='linear')) # a single layer

# equivalent: model.add(Dense(1, input_dim=3)) followed by model.add(Activation('linear'))

model.summary()

tf.keras.utils.plot_model(model, 'abc.png')

tf.keras.utils.plot_model(model, 'filename') : draw the layers as a diagram and save it to a file (requires pydot and graphviz)

# Configure training

model.compile(optimizer=Adam(0.01), loss='mse', metrics=['mse'])

history = model.fit(x_train, y_train, batch_size=32, epochs=100, verbose=1,\

validation_split = 0.2) # hold out 20% of the training data for validation during training

print('history:', history.history)

# Evaluate the model

loss = model.evaluate(x_test, y_test)

print('loss :', loss)

# loss : [0.003264167346060276, 0.003264167346060276]

from sklearn.metrics import r2_score

pred = model.predict(x_test)

print('Predicted : ', pred[:3].flatten())

# Predicted : [0.4591275 0.21831244 0.569612 ]

print('Actual : ', y_test[:3])

# Actual : [0.38582677 0.28346457 0.51574803]

print('R2 : ', r2_score(y_test, pred))

# R2 : 0.920154340793872

model.fit(x_train, y_train, batch_size=, epochs=, verbose=, validation_split = 0.2) : split the training data 8:2 and validate on the 20% part during training
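Keras's documentation says validation_split takes the validation data from the last samples of x and y, before any shuffling. The sketch below mimics that rule in plain Python for the 140 training rows. The helper name is hypothetical, and the exact rounding of the split index is an assumption.

```python
import math

def split_for_validation(samples, validation_split):
    # assumed rule: the validation part is the LAST fraction of the data (before shuffling)
    split_at = int(math.floor(len(samples) * (1.0 - validation_split)))
    return samples[:split_at], samples[split_at:]

rows = list(range(140))                  # stands in for the 140 rows of x_train
train_part, val_part = split_for_validation(rows, 0.2)
print(len(train_part), len(val_part))   # 112 28
print(val_part[0], val_part[-1])        # 112 139
```

If the rows are ordered, for example by time, the validation part is therefore not a random sample.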

Regression on stock data

* ke9_stock.py

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras import optimizers

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import tensorflow as tf

from sklearn.preprocessing import MinMaxScaler, minmax_scale, StandardScaler, RobustScaler

xy = np.loadtxt('https://raw.githubusercontent.com/pykwon/python/master/testdata_utf8/stockdaily.csv',\

delimiter=',', skiprows=1)

print(xy[:2], len(xy))

'''

[[8.28659973e+02 8.33450012e+02 8.28349976e+02 1.24770000e+06

8.31659973e+02]

[8.23020020e+02 8.28070007e+02 8.21655029e+02 1.59780000e+06

8.28070007e+02]] 732

'''

# Normalization

scaler = MinMaxScaler(feature_range=(0, 1))

xy = scaler.fit_transform(xy)

print(xy[:3])

'''

[[0.97333581 0.97543152 1. 0.11112306 0.98831302]

[0.95690035 0.95988111 0.9803545 0.14250246 0.97785024]

[0.94789567 0.94927335 0.97250489 0.11417048 0.96645463]]

'''

x_data = xy[:, 0:-1]

y_data = xy[:, -1]

print(x_data[0], y_data[0])

# [0.97333581 0.97543152 1. 0.11112306] 0.9883130206172026

print(x_data[1], y_data[1])

# [0.95690035 0.95988111 0.9803545 0.14250246] 0.9778502390712853

# Predict the next day's closing price from the previous day's data

x_data = np.delete(x_data, -1, 0) # drop the last row (it has no next day)

y_data = np.delete(y_data, 0) # drop the first value (it has no previous day)

print()

print('predict tomorrow')

print(x_data[0], '->', y_data[0])

# [0.97333581 0.97543152 1. 0.11112306] -> 0.9778502390712853
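The one-day shift pairs each day's features with the next day's close. Here it is in plain Python on a few made-up rows. As in ke9_stock.py, the last column is treated as the close (y_data = xy[:, -1]).

```python
rows = [                       # hypothetical daily rows; last column = close
    [10.0, 11.0, 9.0, 100, 10.5],
    [10.5, 12.0, 10.0, 120, 11.8],
    [11.8, 12.5, 11.0, 90, 12.1],
    [12.1, 12.2, 11.5, 80, 11.7],
]
x_data = [r[:-1] for r in rows]   # features of each day
y_data = [r[-1] for r in rows]    # close of each day

x_data = x_data[:-1]              # like np.delete(x_data, -1, 0): the last day has no "tomorrow"
y_data = y_data[1:]               # like np.delete(y_data, 0): the first close has no "yesterday"

for features, next_close in zip(x_data, y_data):
    print(features, '->', next_close)
```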

model = Sequential()

model.add(Dense(input_dim=4, units=1))

model.compile(optimizer='adam', loss='mse', metrics=['mse'])

model.fit(x_data, y_data, epochs=100, verbose=2)

print(x_data[10])

# [0.88894325 0.88357424 0.90287217 0.10453527]

test = x_data[10].reshape(-1, 4)

print(test)

# [[0.88894325 0.88357424 0.90287217 0.10453527]]

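print('Actual :', y_data[10], ', Predicted :', model.predict(test).flatten())

# Actual : 0.9003840704898083 , Predicted : [0.8847432]

from sklearn.metrics import r2_score

pred = model.predict(x_data)

print('R2 : ', r2_score(y_data, pred))

# R2 : 0.995010085719306 (measured on the same data the model was trained on)

# Split the data in time order: first 70% for training, last 30% for testing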

train_size = int(len(x_data) * 0.7)

test_size = len(x_data) - train_size

print(train_size, test_size) # 511 220

x_train, x_test = x_data[0:train_size], x_data[train_size:len(x_data)]

print(x_train[:2], x_train.shape) # (511, 4)

y_train, y_test = y_data[0:train_size], y_data[train_size:len(x_data)]

print(y_train[:2], y_train.shape) # (511,)

model2 = Sequential()

model2.add(Dense(input_dim=4, units=1))

model2.compile(optimizer='adam', loss='mse', metrics=['mse'])

model2.fit(x_train, y_train, epochs=100, verbose=0)

result = model2.evaluate(x_test, y_test)

print('result :', result) # [loss, mse] on the test data

pred2 = model2.predict(x_test)

print('R2 : ', r2_score(y_test, pred2)) # R2 : 0.8712214499209135

plt.plot(y_test, 'b')

plt.plot(pred2, 'r--')

plt.show()

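# A core issue in machine learning is the tug-of-war between optimization and generalization

# Optimization : tuning the model to perform as well as possible on the training data; pushed too far, it overfits

# Generalization : how well the model classifies/predicts data it has not seen before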

Predicting house prices with the Boston housing dataset

* ke10_boston.py

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras import optimizers

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow.keras.datasets import boston_housing

#print(boston_housing.load_data())

(x_train, y_train), (x_test, y_test) = boston_housing.load_data()

print(x_train[:2], x_train.shape) # (404, 13)

print(y_train[:2], y_train.shape) # (404,)

print(x_test[:2], x_test.shape) # (102, 13)

print(y_test[:2], y_test.shape) # (102,)

'''

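CRIM: per capita crime rate by town

ZN: proportion of residential land zoned for lots over 25,000 sq. ft.

INDUS: proportion of non-retail business acres per town

CHAS: Charles River dummy variable (1 if the tract bounds the river, 0 otherwise)

NOX: nitric oxides concentration (parts per 10 million)

RM: average number of rooms per dwelling

AGE: proportion of owner-occupied units built before 1940

DIS: weighted distances to five Boston employment centers

RAD: index of accessibility to radial highways

TAX: full-value property-tax rate per $10,000

PTRATIO: pupil-teacher ratio by town

B: 1000(Bk - 0.63)^2, where Bk is the proportion of Black residents by town

LSTAT: % lower status of the population

MEDV: median value of owner-occupied homes (in $1,000s); this is the target y

'''

from sklearn.preprocessing import MinMaxScaler, minmax_scale, StandardScaler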

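# Standardization : (value - mean) / standard deviation

scaler = StandardScaler().fit(x_train) # learn the mean/std from the training data only

x_train = scaler.transform(x_train)

x_test = scaler.transform(x_test) # reuse the training statistics for the test data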

print(x_train[:2])

'''

[[-0.27224633 -0.48361547 -0.43576161 -0.25683275 -0.1652266 -0.1764426

0.81306188 0.1166983 -0.62624905 -0.59517003 1.14850044 0.44807713

0.8252202 ]

[-0.40342651 2.99178419 -1.33391162 -0.25683275 -1.21518188 1.89434613

-1.91036058 1.24758524 -0.85646254 -0.34843254 -1.71818909 0.43190599

-1.32920239]]

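'''

def build_model():

    model = Sequential()

    # Note: with 'linear' activations everywhere this network is still a linear model; hidden layers usually use 'relu'

    model.add(Dense(64, activation='linear', input_shape=(x_train.shape[1],)))

    model.add(Dense(32, activation='linear'))

    model.add(Dense(1, activation='linear')) # the number of units usually shrinks toward the output

    model.compile(optimizer='adam', loss='mse', metrics=['mse'])

    return model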
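Keras' Dense layer computes activation(x @ kernel + bias), so with activation='linear' every layer is an affine map, and a composition of affine maps is again affine. The NumPy sketch below checks this for a 13 -> 64 -> 32 -> 1 stack like the one above, using random placeholder weights (not trained Keras weights): the three layers fold into a single W and b that give identical outputs.

```python
import numpy as np

rng = np.random.default_rng(42)

# Random placeholder weights for a 13 -> 64 -> 32 -> 1 stack with linear activations,
# using the row-vector convention of Dense layers: y = x @ W + b
W1, b1 = rng.normal(size=(13, 64)), rng.normal(size=64)
W2, b2 = rng.normal(size=(64, 32)), rng.normal(size=32)
W3, b3 = rng.normal(size=(32, 1)), rng.normal(size=1)

x = rng.normal(size=(5, 13))  # 5 random input rows

# Forward pass through three linear layers
deep = ((x @ W1 + b1) @ W2 + b2) @ W3 + b3

# The same mapping folded into one linear layer
W = W1 @ W2 @ W3
b = (b1 @ W2 + b2) @ W3 + b3
shallow = x @ W + b

print(np.allclose(deep, shallow))  # True
```

This is why a non-linear activation such as 'relu' is needed in the hidden layers for depth to add expressive power.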

model = build_model()

model.summary()

# Exercise 1: train on the training set only, without a validation dataset

history = model.fit(x_train, y_train, epochs=50, batch_size=10, verbose=0)

mse_history = history.history['mse'] # history keys: loss, mse -> take mse

print('mse_history :', mse_history)

# mse_history : [548.9549560546875, 466.8479919433594, 353.4585876464844, 186.83999633789062, 58.98761749267578, 26.056533813476562, 23.167158126831055, 23.637117385864258, 23.369510650634766, 22.879520416259766, 23.390832901000977, 23.419946670532227, 23.037487030029297, 23.752803802490234, 23.961477279663086, 23.314424514770508, 23.156572341918945, 24.04509162902832, 23.13265609741211, 24.095226287841797, 23.08273696899414, 23.30631446838379, 24.038318634033203, 23.243263244628906, 23.506254196166992, 23.377840042114258, 23.529315948486328, 23.724761962890625, 23.4329891204834, 23.686052322387695, 23.25194549560547, 23.544504165649414, 23.093494415283203, 22.901500701904297, 23.991165161132812, 23.457441329956055, 24.34749412536621, 23.256059646606445, 23.843273162841797, 23.13270378112793, 24.404985427856445, 24.354494094848633, 23.51766014099121, 23.392494201660156, 23.11193084716797, 23.509197235107422, 23.29837417602539, 24.12410545349121, 23.416379928588867, 23.74490737915039]

# Exercise 2: train with part of the training set held out for validation (validation_split)

# Note: fit() is called again on the model already trained in Exercise 1, so training continues from those weights instead of starting over

history = model.fit(x_train, y_train, epochs=50, batch_size=10, verbose=0, validation_split=0.3)

mse_history = history.history['mse'] # history keys: loss, mse, val_loss, val_mse -> take mse

print('mse_history :', mse_history)

# mse_history : [19.48627281188965, 19.15229606628418, 18.982120513916016, 19.509700775146484, 19.484264373779297, 19.066728591918945, 20.140111923217773, 19.462392807006836, 19.258283615112305, 18.974916458129883, 20.06231117248535, 19.748247146606445, 20.13493537902832, 19.995471954345703, 19.182003021240234, 19.42215347290039, 19.571495056152344, 19.24733543395996, 19.52226448059082, 19.074302673339844, 19.558866500854492, 19.209842681884766, 18.880287170410156, 19.14659309387207, 19.033899307250977, 19.366600036621094, 18.843536376953125, 19.674291610717773, 19.239337921142578, 19.594730377197266, 19.586498260498047, 19.684917449951172, 19.49432945251465, 19.398204803466797, 19.537694931030273, 19.503393173217773, 19.27028465270996, 19.265226364135742, 19.07738494873047, 19.075668334960938, 19.237651824951172, 19.83896827697754, 18.86182403564453, 19.732463836669922, 20.0035400390625, 19.034374237060547, 18.72059440612793, 19.841144561767578, 19.51473045349121, 19.27489471435547]

val_mse_history = history.history['val_mse'] # history keys: loss, mse, val_loss, val_mse -> take val_mse

print('val_mse_history :', val_mse_history)

# val_mse_history : [19.911706924438477, 19.533662796020508, 20.14069366455078, 20.71445655822754, 19.561399459838867, 19.340707778930664, 19.23623275756836, 19.126638412475586, 19.64912223815918, 19.517324447631836, 20.47089958190918, 19.591028213500977, 19.35943603515625, 20.017181396484375, 19.332469940185547, 19.519393920898438, 20.045940399169922, 18.939823150634766, 20.331043243408203, 19.793170928955078, 19.281906127929688, 19.30805778503418, 18.842435836791992, 19.221630096435547, 19.322744369506836, 19.64993667602539, 19.05265998840332, 18.85285758972168, 19.07070541381836, 19.016603469848633, 19.707555770874023, 18.752607345581055, 19.066970825195312, 19.616897583007812, 19.585346221923828, 19.096216201782227, 19.127830505371094, 19.077239990234375, 19.891225814819336, 19.251203536987305, 19.305219650268555, 18.768598556518555, 19.763708114624023, 19.80074119567871, 19.371135711669922, 19.151229858398438, 19.302906036376953, 19.169986724853516, 19.26124382019043, 19.901819229125977]

# Visualization

plt.plot(mse_history, 'r')

plt.plot(val_mse_history, 'b--')

plt.xlabel('epoch')

plt.ylabel('mse, val_mse')

plt.show()
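In Exercise 2, validation_split=0.3 does not draw a random sample: according to the Keras documentation for Model.fit, the validation data is taken from the last samples of x and y, before shuffling. The hypothetical helper below mimics that for arrays shaped like the Boston training set (404 rows); the exact rounding Keras uses for the split point is an assumption here and could differ by one row.

```python
import numpy as np


def split_like_validation_split(x, y, validation_split):
    """Hypothetical helper mimicking the documented behaviour of
    Model.fit(validation_split=...): the LAST fraction of the samples,
    taken before any shuffling, becomes the validation set."""
    n_train = int(len(x) * (1.0 - validation_split))  # assumed rounding (floor)
    return (x[:n_train], y[:n_train]), (x[n_train:], y[n_train:])


x = np.arange(404 * 13, dtype=float).reshape(404, 13)  # same shape as the Boston x_train
y = np.arange(404, dtype=float)                        # row number used as a stand-in label

(x_tr, y_tr), (x_val, y_val) = split_like_validation_split(x, y, 0.3)
print(len(x_tr), len(x_val))
```

If the rows of x_train were ordered in some systematic way, this tail split would make the validation set unrepresentative, so it is worth shuffling the data once before calling fit().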

from sklearn.metrics import r2_score

print('R2 (explanatory power) :', r2_score(y_test, model.predict(x_test)))

# R2 (explanatory power) : 0.7525586754103629
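r2_score reports the coefficient of determination, R^2 = 1 - SS_res / SS_tot, i.e. the share of the target's variance that the model explains. A minimal NumPy re-implementation (the helper name r2 is ours, for illustration only) makes the two reference points visible: 1.0 for perfect predictions and 0.0 for always predicting the mean.

```python
import numpy as np


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()  # Keras predict() returns shape (n, 1)
    ss_res = np.sum((y_true - y_pred) ** 2)           # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)    # total sum of squares
    return 1.0 - ss_res / ss_tot


y_true = np.array([3.0, 5.0, 7.0, 9.0])
print(r2(y_true, y_true))                           # 1.0 (perfect prediction)
print(r2(y_true, np.full(4, y_true.mean())))        # 0.0 (always predicting the mean)
print(r2(y_true, [[2.5], [5.5], [6.5], [9.5]]))     # 0.95
```

A negative value is also possible: it means the model does worse than simply predicting the mean.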

Regression model: predicting car fuel efficiency (MPG)

* ke11_cars.py

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

from tensorflow.keras import layers

dataset = pd.read_csv('https://raw.githubusercontent.com/pykwon/python/master/testdata_utf8/auto-mpg.csv')

del dataset['car name']

print(dataset.head(2))

pd.set_option('display.max_columns', 100)

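print(dataset.corr(numeric_only=True)) # 'horsepower' is still text at this point, so only the numeric columns are correlated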

'''

                    mpg  cylinders  displacement     weight  acceleration  model year     origin
mpg            1.000000  -0.775396     -0.804203  -0.831741      0.420289    0.579267   0.563450
cylinders     -0.775396   1.000000      0.950721   0.896017     -0.505419   -0.348746  -0.562543
displacement  -0.804203   0.950721      1.000000   0.932824     -0.543684   -0.370164  -0.609409
weight        -0.831741   0.896017      0.932824   1.000000     -0.417457   -0.306564  -0.581024
acceleration   0.420289  -0.505419     -0.543684  -0.417457      1.000000    0.288137   0.205873
model year     0.579267  -0.348746     -0.370164  -0.306564      0.288137    1.000000   0.180662
origin         0.563450  -0.562543     -0.609409  -0.581024      0.205873    0.180662   1.000000

'''

dataset.drop(['cylinders','acceleration', 'model year', 'origin'], axis='columns', inplace=True)

print()

print(dataset.head(2))

'''

mpg displacement horsepower weight

0 18.0 307.0 130 3504

1 15.0 350.0 165 3693

'''

dataset['horsepower'] = pd.to_numeric(dataset['horsepower'], errors='coerce') # errors='coerce': values that cannot be parsed become NaN instead of raising an error

# Some rows contain '?', so the conversion produces NaN for them.

print(dataset.info())

print(dataset.isnull().sum()) # horsepower 6

dataset = dataset.dropna()

print('----------------------------------------------------')

print(dataset)
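pd.to_numeric with errors='coerce' replaces anything it cannot parse with NaN (the default, errors='raise', raises an error instead), which is what lets dropna() remove the '?' rows afterwards. A small sketch on a made-up four-value column standing in for 'horsepower':

```python
import pandas as pd

# Made-up stand-in for auto-mpg's 'horsepower' column
hp = pd.Series(['130', '165', '?', '150'])
print(hp.dtype)  # object: a single '?' keeps the whole column as strings

hp_num = pd.to_numeric(hp, errors='coerce')  # '?' -> NaN, the rest -> float64
print(hp_num.isnull().sum())  # 1

# Made-up mpg values, just to have a second column
df = pd.DataFrame({'mpg': [18.0, 15.0, 16.0, 17.0], 'horsepower': hp_num})
df = df.dropna()  # drops the row whose horsepower was '?'
print(len(df))  # 3
```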

import seaborn as sns

sns.pairplot(dataset[['mpg', 'displacement', 'horsepower', 'weight']], diag_kind='kde')

plt.show()

# train/test

train_dataset = dataset.sample(frac= 0.7, random_state=123)

test_dataset = dataset.drop(train_dataset.index)
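The sample/drop pattern above yields a disjoint 70/30 split: sample() picks rows at random (reproducibly, given random_state), and drop(train_dataset.index) keeps every row whose index label was not picked. It relies on the index labels being unique, as they are here. A toy illustration with a made-up 10-row frame:

```python
import pandas as pd

# Made-up 10-row frame to illustrate the split pattern
df = pd.DataFrame({'mpg': range(10), 'weight': range(100, 110)})

train = df.sample(frac=0.7, random_state=123)  # random 70% of the rows
test = df.drop(train.index)                    # every row that was not sampled

print(len(train), len(test))                       # 7 3
print(train.index.intersection(test.index).empty)  # True: no row is in both sets
```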

print(train_dataset.shape) # (274, 4)

print(test_dataset.shape) # (118, 4)

# Collect training-set statistics for standardization (applying the formula directly)

train_stat = train_dataset.describe()

print(train_stat)

#train_dataset.pop('mpg')

train_stat = train_stat.transpose()

print(train_stat)

# label : mpg

train_labels = train_dataset.pop('mpg')

print(train_labels[:2])

'''

222 17.0

247 39.4

'''

test_labels = test_dataset.pop('mpg')

print(train_dataset)

'''

displacement horsepower weight

222 260.0 110.0 4060

247 85.0 70.0 2070

136 302.0 140.0 4141

'''

print(test_labels[:2])

'''

1 15.0

2 18.0

'''

print(test_dataset)

def st_func(x):

    # standardize with the TRAINING-set mean/std (also applied to the test data)

    return (x - train_stat['mean']) / train_stat['std']
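One detail worth knowing when comparing st_func with StandardScaler from ke10_boston.py: DataFrame.describe() (and therefore train_stat['std']) reports the sample standard deviation (ddof=1), whereas StandardScaler divides by the population standard deviation (ddof=0). The two z-scores differ only by the factor sqrt(n / (n - 1)), which is small for 274 rows. A NumPy check on toy values:

```python
import numpy as np

x = np.array([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])  # toy values, mean 5.0

sample_std = x.std(ddof=1)      # what pandas describe()/Series.std() report
population_std = x.std(ddof=0)  # what StandardScaler divides by

z_sample = (x - x.mean()) / sample_std
z_population = (x - x.mean()) / population_std

n = len(x)
print(population_std)  # 2.0
print(np.allclose(z_population, z_sample * np.sqrt(n / (n - 1))))  # True
```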

print(st_func(10))

'''

mpg -1.706214

displacement -1.745771

horsepower -2.403940

weight -3.440126

'''

print(train_dataset[:3])

'''

displacement horsepower weight

222 260.0 110.0 4060

247 85.0 70.0 2070

136 302.0 140.0 4141

'''

print(st_func(train_dataset[:3]))

'''

displacement horsepower mpg weight

222 0.599039 0.133053 NaN 1.247890

247 -1.042328 -0.881744 NaN -1.055604

136 0.992967 0.894151 NaN 1.341651

'''
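The NaN 'mpg' column in the output above comes from pandas label alignment: subtracting a Series from a DataFrame matches the Series index against the DataFrame columns, and train_stat still has an 'mpg' row while train_dataset no longer has an 'mpg' column. The result holds the union of the labels, with NaN wherever one side is missing, which is why the pop('mpg') calls that follow are needed (dropping 'mpg' from train_stat beforehand would work too). A toy reproduction with made-up numbers:

```python
import pandas as pd

# Made-up stand-ins: features WITHOUT 'mpg', statistics WITH an 'mpg' entry
features = pd.DataFrame({'displacement': [260.0, 85.0], 'weight': [4060.0, 2070.0]})
stat_mean = pd.Series({'mpg': 23.0, 'displacement': 190.0, 'weight': 2950.0})

diff = features - stat_mean  # the Series index is aligned to the DataFrame columns
print(diff.columns.tolist())       # includes 'mpg'
print(diff['mpg'].isnull().all())  # True: there was no 'mpg' column to subtract from
```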

st_train_data = st_func(train_dataset) # train feature

st_test_data = st_func(test_dataset) # test feature

st_train_data.pop('mpg')

st_test_data.pop('mpg')

print(st_train_data)

print(st_test_data)

# The datasets are now ready to feed into the model

# Model

def build_model():

    network = tf.keras.Sequential([

        layers.Dense(units=64, input_shape=[3], activation='linear'),

        layers.Dense(64, activation='linear'), # 'relu' is the more common choice for a hidden layer

        layers.Dense(1, activation='linear')

    ])

    #opti = tf.keras.optimizers.RMSprop(0.01)

    opti = tf.keras.optimizers.Adam(0.01)

    network.compile(optimizer=opti, loss='mean_squared_error',
                    metrics=['mean_absolute_error', 'mean_squared_error'])

    return network

build_model().summary() # Total params: 4,481
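The "Total params: 4,481" figure can be checked by hand: a Dense layer with n_in inputs and n_out units has n_in * n_out weights plus n_out biases, so 3*64+64 = 256, 64*64+64 = 4,160 and 64*1+1 = 65, which add up to 4,481. A small helper (hypothetical, not part of Keras) doing the same arithmetic:

```python
def dense_param_count(layer_sizes):
    """Parameters in a stack of fully connected layers:
    each layer has inputs * units weights plus units biases."""
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


print(dense_param_count([3, 64, 64, 1]))   # 4481 (ke11_cars.py model)
print(dense_param_count([13, 64, 32, 1]))  # 3009 (ke10_boston.py model)
```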

# The model can already be run (e.g. predict) before fit(); its weights are just randomly initialized.

model = build_model()

print(st_train_data[:1])

print(model.predict(st_train_data[:1])) # ignore the result: the weights are still untrained

# Training

epochs = 10

# Early stopping

early_stop = tf.keras.callbacks.EarlyStopping(monitor='loss', patience=3)

history = model.fit(st_train_data, train_labels, batch_size=32, epochs=epochs,
                    validation_split=0.2, verbose=1, callbacks=[early_stop])

df = pd.DataFrame(history.history)

print(df.head(3))

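print(df.columns)

tf.keras.callbacks.EarlyStopping(monitor='loss', patience=3) : early stopping. Training halts once the monitored value (here the training loss) has failed to improve for 3 consecutive epochs.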
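EarlyStopping(monitor='loss', patience=3) tracks the best monitored value seen so far and stops once it has gone patience epochs without improving on it. The function below is a simplified plain-Python sketch of that rule only (the name stopping_epoch is hypothetical); the real callback also supports options such as min_delta, baseline and restore_best_weights that are ignored here. Note that with epochs = 10, as in the code above, training may finish before the rule ever fires.

```python
def stopping_epoch(losses, patience):
    """Simplified patience-based early stopping (lower is better, min_delta=0).
    Returns the 0-based epoch after which training would stop,
    or None if training runs through all epochs."""
    best = float('inf')
    wait = 0
    for epoch, loss in enumerate(losses):
        if loss < best:
            best = loss
            wait = 0  # an improvement resets the counter
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


print(stopping_epoch([5.0, 4.0, 3.9, 4.1, 4.0, 3.95, 3.0], patience=3))  # 5
print(stopping_epoch([5.0, 4.0, 3.0, 2.0], patience=3))                  # None
```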

# Visualization

def plot_history(history):

    hist = pd.DataFrame(history.history)

    hist['epoch'] = history.epoch

    plt.figure(figsize=(8, 12))

    plt.subplot(2, 1, 1)

    plt.xlabel('epoch')

    plt.ylabel('Mean Abs Error [MPG]')

    plt.plot(hist['epoch'], hist['mean_absolute_error'], label='train error')

    plt.plot(hist['epoch'], hist['val_mean_absolute_error'], label='val error')

    #plt.ylim([0, 5])

    plt.legend()

    plt.subplot(2, 1, 2)

    plt.xlabel('epoch')

    plt.ylabel('Mean Squared Error [MPG^2]')

    plt.plot(hist['epoch'], hist['mean_squared_error'], label='train error')

    plt.plot(hist['epoch'], hist['val_mean_squared_error'], label='val error')

    #plt.ylim([0, 20])

    plt.legend()

    plt.show()

plot_history(history)
